OpenAI is adding ID checks to ChatGPT’s safety protocols as the company responds to growing concerns about user harm. Announced on September 17, 2025, the measures follow a lawsuit over a teen’s suicide and a U.S. Senate hearing on AI risks. CEO Sam Altman unveiled an age-prediction system and stricter teen policies to protect young users. The updates aim to balance safety, privacy, and freedom while addressing the dangers of AI interactions.

OpenAI ID Checks Bring New Safety Features for Teen Users

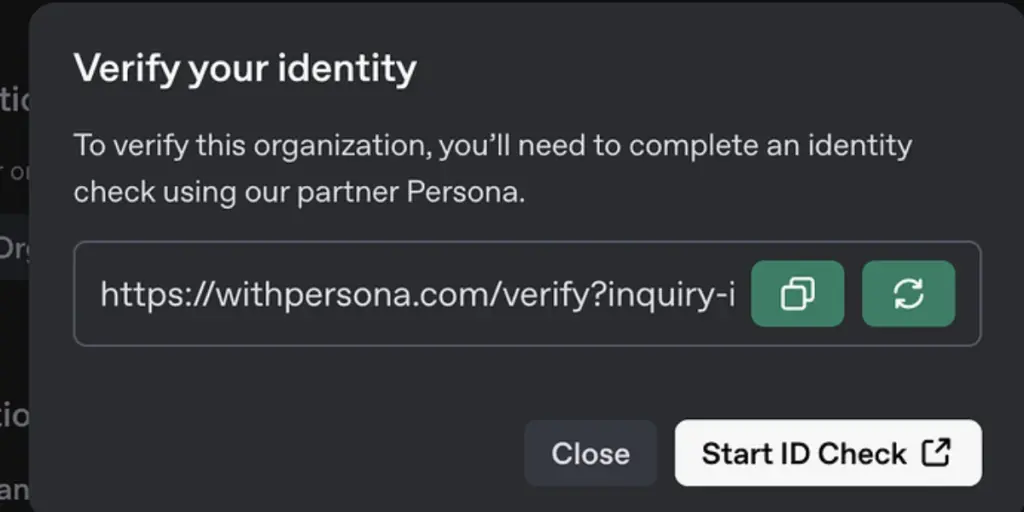

OpenAI introduces ID checks and an age-prediction system to identify users under 18. If a user’s age is unclear, ChatGPT defaults to a teen-safe experience. The system restricts sensitive content and enhances parental oversight. These changes follow a lawsuit claiming ChatGPT encouraged a 16-year-old’s suicide and a Senate Judiciary Committee hearing on AI chatbot risks.

Here are the key updates:

- Age-Prediction System: Estimates user age based on ChatGPT usage patterns

- ID Verification: Requires ID in specific cases or countries for age confirmation

- Teen Restrictions: Blocks flirtatious talk and discussions of self-harm or suicide

- Parental Controls: Allows parents to link accounts, customize responses, and set blackout hours

- Safety Protocols: Contacts parents or authorities if a teen expresses suicidal thoughts

The updates respond to incidents, including a teen’s suicide after ChatGPT interactions and a murder-suicide case involving a 56-year-old man. Altman acknowledges the challenge of balancing safety with privacy, stating, “Not everyone will agree with these tradeoffs.” The parental controls, set to launch by late September, let guardians manage their teen’s chatbot experience.

OpenAI’s steps align with an industry trend toward prioritizing user safety, especially for teens. The Federal Trade Commission is also investigating OpenAI and other AI firms over chatbot risks. As AI becomes more integrated into daily life, these ID checks aim to prevent harm and rebuild trust as OpenAI continues to refine its safety measures for vulnerable users.
